Intro

In March 2025, headlines announced that TypeScript was getting 10x faster.

The real matter

What Is Actually 10x Faster?

What’s actually getting faster is the TypeScript compiler, not the TypeScript language itself or JavaScript’s runtime performance. Your TypeScript code will compile faster, but it won’t suddenly execute 10x faster in the browser or Node.js.

It’s a bit like saying,

“We made your car 10x faster!”

and then clarifying that only the manufacturing process got faster, while the car itself still drives at the same speed. It’s still valuable, especially if you’ve been waiting months for your car, but not quite what the headline suggests.

Benchmarks Shared

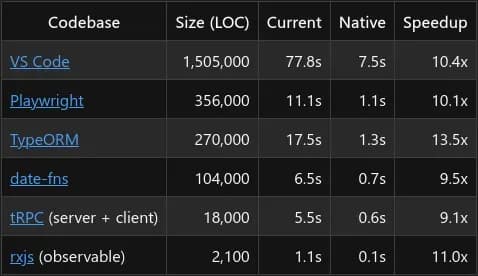

Anders Hejlsberg’s announcement showcased impressive numbers:

Let’s be honest: whenever you see a “10x faster” claim, you should view it with healthy scepticism. My first reaction was, “OK, what were they doing suboptimally before?” Because 10x improvements don’t materialize from thin air. They typically indicate that something wasn’t done well enough in the first place, be it the implementation or some design choices.

Architecture of Node.js

Node.js is built on Google’s V8 JavaScript engine, which is the same high-performance engine that powers Chrome. The V8 engine itself is written in C++, and Node.js essentially provides a runtime environment around it. This architecture is key to understanding Node.js performance:

- V8 Engine: Compiles JavaScript to machine code using Just-In-Time (JIT) compilation techniques

- libuv: A C library that handles asynchronous I/O operations

- Core Libraries: Many written in C/C++ for performance. That’s also why database connectors used in Node.js, Go, and Rust show few significant performance differences.

- JavaScript APIs: Thin wrappers around these native implementations

When people talk about Node.js, they often don’t realize they’re talking about a system where critical operations are executed by highly optimized C/C++ code. Your JavaScript often just orchestrates calls to these native implementations.

Fun fact: Node.js has been among the fastest web server technologies available for many years. When it first appeared, it outperformed many traditional threaded web servers in benchmarks, especially for high-concurrency, low-computation workloads. This wasn’t an accident—it was by design.

Memory-Bound vs. CPU-Bound

For typical web applications, most of the time is spent waiting for I/O operations to complete. A typical request flow might be:

- Receive HTTP request (memory-bound)

- Parse request data (memory-bound)

- Query database (I/O-bound, mostly waiting)

- Process results (memory-bound)

- Format response (memory-bound)

- Send HTTP response (I/O-bound)

Here’s what many people miss: I/O-intensive operations in Node.js work at nearly C-level speeds. When you make a network request or read a file in Node.js, you’re essentially calling C functions with a thin JavaScript wrapper.

This architecture made Node.js revolutionary for web servers. When properly used, a single Node.js process can efficiently handle thousands of concurrent connections, often outperforming thread-per-request models for typical web workloads.

Compilers: The CPU-Intensive Beast

A compiler is practically the poster child for CPU-intensive workloads. It needs to:

- Parse source code into tokens and abstract syntax trees

- Perform complex type-checking and inference

- Apply transformations and optimizations

- Generate output code

These operations involve complex algorithms, large memory structures, and lots of computation—exactly the kind of work that challenges JavaScript’s execution model.
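
To make the phases above concrete, here is a minimal sketch of a toy compiler for expressions like `1 + 22 + 3`. It is not how the TypeScript compiler is structured; the names `tokenize`, `parse`, `fold`, `emit`, and `compile` are all made up for illustration, and the type-checking phase is left out entirely. Even at this size, every phase is pure computation over in-memory structures, with no I/O to wait on.

```go
package main

import (
	"fmt"
	"strconv"
)

// Token is a lexical unit: a number ("num") or the plus operator ("plus").
type Token struct{ Kind, Text string }

// Node is an AST node: a literal (Left and Right are nil) or an addition.
type Node struct {
	Value       int
	Left, Right *Node
}

// tokenize splits source text such as "1 + 22 + 3" into tokens.
func tokenize(src string) ([]Token, error) {
	var tokens []Token
	for i := 0; i < len(src); {
		c := src[i]
		switch {
		case c == ' ':
			i++
		case c == '+':
			tokens = append(tokens, Token{"plus", "+"})
			i++
		case c >= '0' && c <= '9':
			start := i
			for i < len(src) && src[i] >= '0' && src[i] <= '9' {
				i++
			}
			tokens = append(tokens, Token{"num", src[start:i]})
		default:
			return nil, fmt.Errorf("unexpected character %q at offset %d", c, i)
		}
	}
	return tokens, nil
}

// parse builds a left-associative AST from tokens shaped like: num (+ num)*.
func parse(tokens []Token) (*Node, error) {
	if len(tokens)%2 == 0 {
		return nil, fmt.Errorf("expected num (+ num)*, got %d tokens", len(tokens))
	}
	var root *Node
	for i, t := range tokens {
		if i%2 == 1 {
			if t.Kind != "plus" {
				return nil, fmt.Errorf("expected +, got %q", t.Text)
			}
			continue
		}
		if t.Kind != "num" {
			return nil, fmt.Errorf("expected number, got %q", t.Text)
		}
		n, err := strconv.Atoi(t.Text)
		if err != nil {
			return nil, err
		}
		leaf := &Node{Value: n}
		if root == nil {
			root = leaf
		} else {
			root = &Node{Left: root, Right: leaf}
		}
	}
	return root, nil
}

// fold is a toy optimization pass: it collapses constant additions into one literal.
func fold(n *Node) *Node {
	if n.Left == nil {
		return n
	}
	return &Node{Value: fold(n.Left).Value + fold(n.Right).Value}
}

// emit generates output text for the tree.
func emit(n *Node) string {
	if n.Left == nil {
		return strconv.Itoa(n.Value)
	}
	return "(" + emit(n.Left) + " + " + emit(n.Right) + ")"
}

// compile runs the phases in order: tokenize, parse, optionally fold, emit.
func compile(src string, optimize bool) (string, error) {
	tokens, err := tokenize(src)
	if err != nil {
		return "", err
	}
	ast, err := parse(tokens)
	if err != nil {
		return "", err
	}
	if optimize {
		ast = fold(ast)
	}
	return emit(ast), nil
}

func main() {
	for _, optimize := range []bool{false, true} {
		out, err := compile("1 + 22 + 3", optimize)
		fmt.Println(out, err)
	}
}
```

Without the optimization pass the program prints `((1 + 22) + 3)`, and with it the output is `26`.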

For TypeScript specifically, as the language grew more complex and powerful over the years, the compiler had to handle increasingly sophisticated type checking, inference, and code generation. This progression naturally pushed against the limits of what’s efficient in a JavaScript runtime.

Same Code, Different Execution Model

Anders Hejlsberg said they evaluated multiple languages, and Go was the easiest to port the code base into. The TypeScript team has apparently created a tool that generates Go code, resulting in a port that’s nearly line-for-line equivalent in many places. That might lead you to think the code is “doing the same thing,” but that’s a misconception.

In JavaScript:

- All code runs on a single thread with an event loop,

- Long-running operations need to be broken up or delegated to worker threads,

- Concurrent execution requires careful handling to avoid blocking the event loop.

In Go:

- Code naturally runs across multiple goroutines (lightweight threads),

- Long-running operations can execute without blocking other work,

- The language and runtime are designed for concurrent execution.
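
As a rough illustration of the Go side of this comparison, the sketch below fans CPU-bound work out across goroutines. `checkFile` is a made-up stand-in for real per-file work, and `checkAll` is a hypothetical helper, not anything from the TypeScript port.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// checkFile stands in for CPU-bound work on one file (hypothetical):
// it just sums a simple function of each byte.
func checkFile(src string) int {
	cost := 0
	for i := 0; i < len(src); i++ {
		cost += int(src[i]) % 7
	}
	return cost
}

// checkAll processes every file in its own goroutine.
// Each goroutine writes only to its own slot of results, so no lock is needed.
func checkAll(files []string) []int {
	results := make([]int, len(files))
	var wg sync.WaitGroup
	for i, src := range files {
		wg.Add(1)
		go func(i int, src string) {
			defer wg.Done()
			results[i] = checkFile(src)
		}(i, src)
	}
	wg.Wait() // block until every goroutine has finished
	return results
}

func main() {
	files := []string{"let a = 1", "const b: string = 'x'", "function f() {}"}
	fmt.Println("CPU cores available:", runtime.NumCPU())
	fmt.Println(checkAll(files))
}
```

The Go runtime schedules these goroutines across the available cores, so nothing has to be chunked or handed off to keep an event loop responsive.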

Writing truly performant JavaScript means embracing its asynchronous nature and event loop constraints—something that becomes increasingly challenging in complex codebases like a compiler.

But What About Worker Threads?

“Doesn’t Node.js have Worker Threads now? Couldn’t they have used those instead of migrating to Go?”

Each worker runs in its own V8 instance with its own JavaScript heap, allowing true parallelism. Workers communicate with the main thread (and each other) by sending and receiving messages, which can include transferring ownership of certain data types to avoid copying.

Why Weren’t Worker Threads the Solution for TypeScript?

So why might the TypeScript team have chosen Go over retrofitting the existing codebase with worker threads? We don’t know for sure, but there are several plausible reasons:

- Legacy Codebase Challenges: The TypeScript compiler has been in development for over a decade. Retrofitting a large, mature codebase designed for single-threaded execution with a multi-threaded architecture is often more complex than starting fresh. Workers communicate primarily through message passing, and restructuring a compiler to operate this way requires fundamentally rethinking how components interact. The video showed that even single-threaded Go was faster, so the JavaScript code would probably still need changes to better account for event loop characteristics.

- Data Sharing Complexity: Workers have limited ability to share memory. A compiler manipulates complex, interconnected data structures (abstract syntax trees, type systems, etc.) that don’t neatly divide into isolated chunks for parallel processing.

- Performance Overhead: While worker threads provide parallelism, they come with overhead. Each worker has its own V8 instance with separate memory, and data passed between threads typically needs to be serialized and deserialized. They are not as lightweight as threads or goroutines.

- Timeline Mismatch: When the TypeScript compiler was designed and implemented (around 2012), worker threads didn’t exist in Node.js. The architecture decisions made early on would have assumed a single-threaded model, making later parallelization more difficult.

- Dead-End Assessment: The team may have concluded that even with worker threads, JavaScript would still impose fundamental limitations for their specific workload that would eventually become bottlenecks again.

- Skill Set Alignment: The decision may have partly reflected organizational expertise and strategic alignment with other developer tools.

Go’s Approach vs. Worker Threads

Go’s approach to concurrency offers several advantages over Node.js worker threads for compiler workloads:

- Lightweight Goroutines: Goroutines are extremely lightweight (starting at ~2KB of memory) compared to worker threads (which require a separate V8 instance), making fine-grained parallelism more practical.

- Shared Memory Model: Go allows direct shared memory between goroutines with synchronization primitives, making working with complex, interconnected data structures easier.

- Language-Level Concurrency: Concurrency is built into Go at the language level with goroutines and channels, making parallel code more natural to write and reason about.

- Lower Overhead Communication: Communication between goroutines is significantly more efficient than the serialization/deserialization required for worker thread communication.

- Mature Scheduler: Go’s runtime includes a mature, efficient scheduler for managing thousands of goroutines across available CPU cores.
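
The shared-memory and low-overhead communication points can be sketched as follows. `Symbol`, `SymbolTable`, and `resolveAll` are hypothetical names invented for this example. Only pointers travel through the channel, so workers mutate the original objects instead of serialized copies.

```go
package main

import (
	"fmt"
	"sync"
)

// Symbol is a hypothetical entry in a compiler's symbol table.
type Symbol struct {
	Name     string
	Resolved bool
}

// SymbolTable is shared by all goroutines; the mutex guards the map.
type SymbolTable struct {
	mu      sync.Mutex
	symbols map[string]*Symbol
}

// add registers a symbol under the lock so concurrent writers are safe.
func (t *SymbolTable) add(s *Symbol) {
	t.mu.Lock()
	defer t.mu.Unlock()
	t.symbols[s.Name] = s
}

// resolveAll sends pointers over a channel to a pool of workers.
// The Symbol values themselves are never copied or serialized.
func resolveAll(syms []*Symbol, workers int) *SymbolTable {
	table := &SymbolTable{symbols: make(map[string]*Symbol)}
	jobs := make(chan *Symbol)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for s := range jobs {
				s.Resolved = true // each symbol is received by exactly one worker
				table.add(s)
			}
		}()
	}
	for _, s := range syms {
		jobs <- s
	}
	close(jobs) // lets the workers' range loops end
	wg.Wait()
	return table
}

func main() {
	syms := []*Symbol{{Name: "foo"}, {Name: "bar"}, {Name: "baz"}}
	table := resolveAll(syms, 2)
	// The table holds the very same objects that main created.
	fmt.Println(table.symbols["foo"] == syms[0], syms[0].Resolved)
}
```

The program prints `true true`: the table entry and the caller's symbol are the same object, and the worker's update is visible without any copy back.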

Also, I think the migration to Go wasn’t just about “multithreading vs. single-threading” but about adopting a programming model where concurrency is a first-class concept, deeply integrated into the language and runtime.

The Evolution Problem

This is a classic example of how successful software often faces scaling challenges that weren’t anticipated in its early design. As the TypeScript compiler became more complex and was applied to larger codebases, its JavaScript foundations became increasingly restrictive.

Why Go Instead of Rust?

Rust was a strong contender, but presented significant challenges due to TypeScript’s architecture. Hejlsberg provides revealing details about the compiler’s internals:

“All of our data structures are heavily cyclic. We have ASTs or abstract syntax trees with both child and parent pointers. We have symbols with declarations that reference AST nodes that reference back to symbols. We have types that go around in circles because they’re recursive.”

These cyclic structures clash fundamentally with Rust’s ownership model and borrow checker. As Hejlsberg explained: “Trying to unravel all of that would just make the job of moving to native insurmountably larger.”
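
A minimal sketch of the kind of cyclic structure the quote describes, written in Go. The `Node` and `Symbol` types and their fields are invented for illustration and are not taken from the actual compiler. With a garbage collector, plain pointers are enough to link nodes in both directions. In Rust, the same shape typically calls for reference-counted or weak pointers, or indices into an arena.

```go
package main

import "fmt"

// Node is a hypothetical AST node with both child and parent pointers.
type Node struct {
	Kind     string
	Parent   *Node
	Children []*Node
	Symbol   *Symbol
}

// Symbol points back at the node that declares it, closing a second cycle.
type Symbol struct {
	Name        string
	Declaration *Node
}

// addChild links parent and child in both directions and returns the child.
func (n *Node) addChild(child *Node) *Node {
	child.Parent = n
	n.Children = append(n.Children, child)
	return child
}

// depth walks parent pointers up to the root and counts the steps.
func (n *Node) depth() int {
	d := 0
	for p := n.Parent; p != nil; p = p.Parent {
		d++
	}
	return d
}

func main() {
	file := &Node{Kind: "SourceFile"}
	fn := file.addChild(&Node{Kind: "FunctionDeclaration"})
	body := fn.addChild(&Node{Kind: "Block"})

	// Node -> Symbol -> Node: the cycle needs no special handling.
	fn.Symbol = &Symbol{Name: "greet", Declaration: fn}

	fmt.Println(body.depth())                         // 2
	fmt.Println(fn.Symbol.Declaration == fn)          // true
	fmt.Println(body.Parent.Parent.Children[0] == fn) // true
}
```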

What Makes Go the Right Choice?

Go offered the right balance of:

- Automatic garbage collection

- Native performance

- Simpler learning curve from JavaScript

- Good support for inline data structures

- Concurrency with shared memory

What do we get?

The Go-based compiler delivers:

- 10x faster type checking

- 50% reduction in memory usage

- Native concurrency support

These improvements come from two key advantages:

- Native code execution: ~3.5x speedup compared to JavaScript

- Shared-memory concurrency: Another ~3x speedup by utilizing multiple CPU cores
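
Assuming the two factors are independent and simply multiply (an assumption, since they are only listed separately here), the headline figure follows from:

$$
3.5 \times 3 = 10.5 \approx 10
$$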